Quick-Start Guide

You've just signed up for our free trial. We are already crawling your website to fill your search with content. At this point you might be wondering, what do I do now? How do I get my new search up and running on my site?

We have put together this quick-start guide to help you do just that in three steps.

1. Check your indexed site(s)

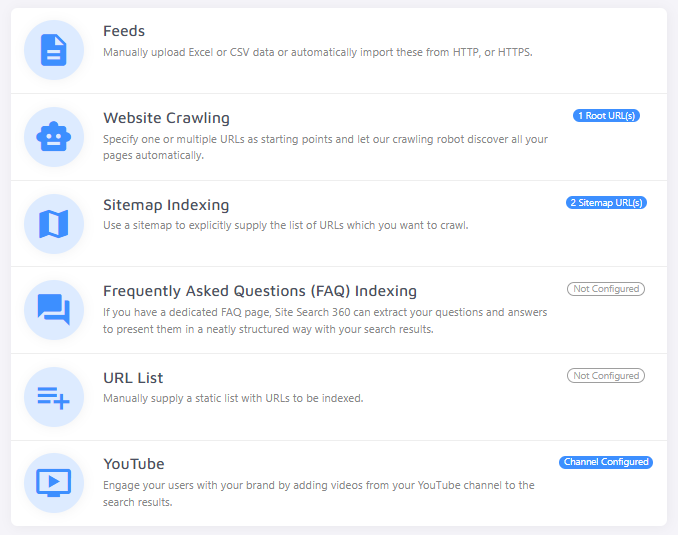

The quality of your search will depend on what our crawler finds and indexes. For this reason, the first stop on our journey is the Data Sources section of the Control Panel.

Start by navigating to Website Crawling. Here you can double-check that we are crawling the correct website(s).

Pro tip: consider providing a sitemap under Sitemap Indexing instead of your root URL. Besides making the crawling of your site faster and more efficient, a sitemap is an integral part of good SEO practice.

Once you are sure we are crawling the right site or sitemap, you are ready for step 2!

Note for e-commerce: if you have an online shop with a product feed, you can upload a feed or provide a feed URL under Feeds. If you do not see a Feeds section, please sign up for a trial of our e-commerce search or contact support.

2. Improve your indexed content

On the Dashboard, you will see how many pages (and possibly products) are in your index.

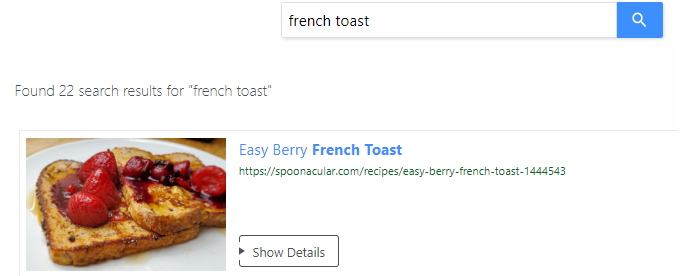

If the crawler has made enough progress, go ahead and head over to the Search Preview and test out a few queries. Don't worry: testing the search here does not affect your quotas.

If you are happy with your search results, you can already move to step 3.

If you find that your search includes unwanted pages, or some search results do not look the way you expect, let's do a bit of fine-tuning first.

Blacklisting and Whitelisting

To make sure your search only finds the pages you want, you can use black- and whitelisting. Blacklisting excludes pages from your search index, and also prevents the crawler from following links on those pages. Whitelisting tells the crawler that only pages fitting the whitelisting rules should be added to the search. Both should be used carefully since they have a big impact on your search index.

You can blacklist or whitelist using URL patterns, XPath, and/or CSS selectors. For example, you might blacklist the URL pattern /tag/ if pages like blog.mysite.com/tag/breakfast clutter your index and do not belong in your search.
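Before adding a rule, it can help to check which of your URLs a pattern such as /tag/ would catch. The sketch below assumes a pattern is treated as a regular expression matched anywhere in the URL. That is an assumption made for illustration, so the Control Panel's own matching may differ in detail. The function name `matchesPattern` and the sample URLs are hypothetical.

```javascript
// Sketch only: assumes a URL pattern is a regular expression tested against the full URL.
function matchesPattern(url, pattern) {
  return new RegExp(pattern).test(url);
}

// Hypothetical sample of URLs from a blog.
const sampleUrls = [
  "https://blog.mysite.com/tag/breakfast",
  "https://blog.mysite.com/recipes/pancakes",
  "https://blog.mysite.com/tag/dinner",
];

// URLs that a blacklist rule of "/tag/" would exclude under this assumption.
const excluded = sampleUrls.filter((url) => matchesPattern(url, "/tag/"));
console.log(excluded);
```

Running a pattern against a handful of real URLs from your site is a cheap way to spot rules that are broader than intended.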

Alternatively, you can set up a no-index URL pattern instead. This means the crawler will not index pages with the URL pattern /tag/, but it will still follow links to potentially relevant pages.
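The difference between the two rule types can be summed up in a small decision function. This is only a model of the behaviour described above, not the crawler's actual code: the rule names `blacklist` and `noIndex` are hypothetical, patterns are assumed to be regular expressions, and whitelisting is left out for brevity.

```javascript
// Model of the described behaviour; rule names and regex matching are assumptions.
function crawlDecision(url, rules) {
  const hits = (patterns) =>
    (patterns || []).some((pattern) => new RegExp(pattern).test(url));

  if (hits(rules.blacklist)) {
    // Blacklisted: not indexed, and links on the page are not followed.
    return { index: false, followLinks: false };
  }
  if (hits(rules.noIndex)) {
    // No-index: not indexed, but links are still followed.
    return { index: false, followLinks: true };
  }
  return { index: true, followLinks: true };
}

const tagPage = "https://blog.mysite.com/tag/breakfast";
console.log(crawlDecision(tagPage, { blacklist: ["/tag/"] }));
console.log(crawlDecision(tagPage, { noIndex: ["/tag/"] }));
```

If your tag pages are the only route to some articles, the no-index variant keeps those articles reachable for the crawler.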

Not sure whether your index needs improving? You can review your indexed pages under Control Panel > Index to get an idea of where you might need to add blacklisting and whitelisting rules.

Possible Issues

If you have problems with duplicate URLs (e.g. mysite.com and mysite.com/ or mysite.com/page and mysite.com/page?utm_campaign=google), please refer to our post on cleaning up duplicates.
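To see how many duplicates of this kind are in a list of your own URLs, you could normalize them and compare. The sketch below is a local illustration, not the way Site Search 360 handles duplicates. The function name `normalizeUrl` and the rule of dropping every parameter starting with `utm_` are assumptions.

```javascript
// Sketch: collapse the two duplicate types mentioned above into one canonical form.
function normalizeUrl(rawUrl) {
  const url = new URL(rawUrl);

  // Drop tracking parameters such as utm_campaign (assumed prefix: "utm_").
  for (const key of [...url.searchParams.keys()]) {
    if (key.startsWith("utm_")) {
      url.searchParams.delete(key);
    }
  }

  // Treat "/page/" and "/page" as the same path; leave the root "/" alone.
  if (url.pathname.length > 1 && url.pathname.endsWith("/")) {
    url.pathname = url.pathname.slice(0, -1);
  }
  return url.toString();
}

const urls = [
  "https://mysite.com",
  "https://mysite.com/",
  "https://mysite.com/page",
  "https://mysite.com/page?utm_campaign=google",
];

// A Set keeps one entry per normalized URL.
const unique = new Set(urls.map(normalizeUrl));
console.log([...unique]);
```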

Should you find errors in your index, refer to our documentation on fixing common errors.

Adjusting Titles, Images, Content

Normally, the Site Search 360 crawler pulls the most relevant content for your search result titles, images, and descriptions automatically. It could happen, though, that the crawler does not find the right content on its own (like the image in the screenshot below) due to a tricky site structure.

No worries: you can tell the crawler exactly what you want under Data Structuring > Content Extraction. This does require some experience with XPath or CSS selectors, but both are easy to learn. If you prefer CSS, just use the toggle in the top-right menu.

With XPath or CSS, you can define which information on your page should be used for the search result titles, images, and content.

Common examples of site sections to exclude would be headers, footers, navigation, etc.
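If you are new to selectors, the sketch below shows what a set of extraction rules might look like and how to preview the CSS variants in your browser console. Every selector here is hypothetical and written for an imagined blog layout, so replace them with ones that match your own markup. The helper `previewCssExtraction` is not part of Site Search 360; it only relies on the standard `querySelector` method.

```javascript
// Hypothetical selectors for an imagined blog layout; adjust to your own pages.
const extractionRules = {
  title: { css: "article h1", xpath: "//article//h1" },
  // contains() is a loose class match; it would also match e.g. "superhero".
  image: { css: "article img.hero", xpath: "//article//img[contains(@class, 'hero')]" },
  content: { css: "article .post-body", xpath: "//article//*[contains(@class, 'post-body')]" },
};

// Returns what each CSS selector picks up on the given document, or null if nothing matches.
function previewCssExtraction(doc, rules) {
  const preview = {};
  for (const [field, rule] of Object.entries(rules)) {
    const element = doc.querySelector(rule.css);
    if (!element) {
      preview[field] = null;
    } else if (element.tagName === "IMG") {
      preview[field] = element.getAttribute("src");
    } else {
      preview[field] = element.textContent.trim();
    }
  }
  return preview;
}
```

On one of your pages, calling `previewCssExtraction(document, extractionRules)` in the console shows at a glance which rules match nothing and need another look.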

After making these changes, reindex your site and test your search again. If the search looks good, move on to step 3. If not, refine, reindex, and repeat until the search really shines!

3. Install your new search on your site

You're almost there! Head over to Design & Publish and select Start now.

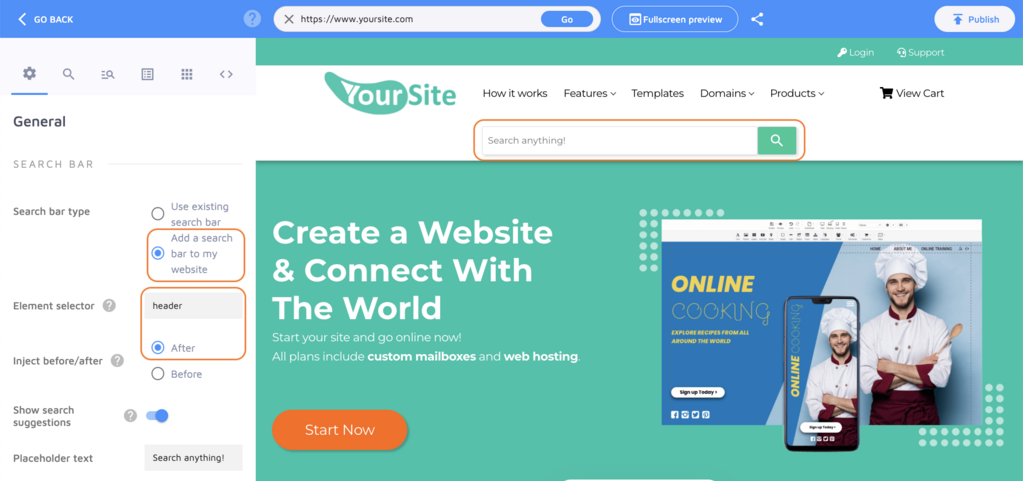

Now you will see some search customization options. Customizing the look and feel of your new search will really make it feel at home on your site. This guide to the Search Designer can help you navigate all the different possibilities.

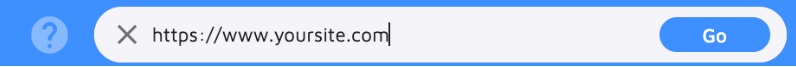

You can also decide whether to connect Site Search 360 to an existing search bar or add a new one. If you already have a search bar, try loading your website in the top bar and we'll detect it automatically.

In the event we can't detect it automatically, please provide the CSS selector pointing to your search bar.
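Before you paste a selector into the Search Designer, you may want to confirm that it matches exactly one element on your page. A minimal sketch for the browser console follows. The helper name `checkSearchBarSelector` and the example selector `#searchBox` are assumptions, and only the standard `querySelectorAll` method is used.

```javascript
// Counts how many elements a selector matches on the given document.
function checkSearchBarSelector(doc, selector) {
  const matches = doc.querySelectorAll(selector);
  return { count: matches.length, unique: matches.length === 1 };
}
```

For example, `checkSearchBarSelector(document, "#searchBox")` should report a count of 1 if the selector points at a single search input. A count of 0 or more than 1 suggests the selector needs to be more specific.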

If you don't have a search box on your site yet, you can insert the following code snippet wherever you want the box and search button to be displayed on your site, such as in your site header:

<section role="search" data-ss360="true">
  <input type="search" id="searchBox" placeholder="Search…">
  <button id="searchButton"></button>
</section>

You can also go for the "Add a search bar to my website" option and specify before or after which element on your site you'd like the search box to appear.

After this, you are just a JavaScript code snippet away from a powerful new search engine for your site!

Press "Publish" in the top-right corner to get your personalized code snippet, which will look something like this:

<script async src="https://js.sitesearch360.com/plugin/bundle/0000.js"></script>

Your personalized script should go before the closing </body> tag in your site template. Note: the last four digits in this snippet vary from project to project, so you must use your own line of code rather than paste the one from this guide to your website.
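If your site template is generated by a build script, the placement could be automated along these lines. This is a sketch under assumptions: the helper `insertBeforeBodyClose` and the sample template are hypothetical, and the snippet shown is the placeholder from this guide, not a working project script.

```javascript
// Placeholder copied from this guide; use the snippet from your own "Publish" dialog.
const searchSnippet =
  '<script async src="https://js.sitesearch360.com/plugin/bundle/0000.js"></script>';

// Inserts the snippet directly before the last closing </body> tag of a template.
function insertBeforeBodyClose(templateHtml, snippet) {
  const matches = [...templateHtml.matchAll(/<\/body\s*>/gi)];
  if (matches.length === 0) {
    throw new Error("Template has no closing </body> tag");
  }
  const index = matches[matches.length - 1].index;
  return templateHtml.slice(0, index) + snippet + "\n" + templateHtml.slice(index);
}

// Hypothetical minimal template.
const template = "<html><body><h1>Hello</h1>\n</body></html>";
console.log(insertBeforeBodyClose(template, searchSnippet));
```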

Next time you return to the Search Designer to tweak some settings, all you need to do to apply your changes is click "Publish" and wait a few minutes. No need to touch the code ever again.

Hoping for something even easier? You might be able to use one of our many plugins or extensions!

However you decide to install our search, we hope you and your visitors enjoy your improved search experience. Please do not hesitate to reach out with any questions or feedback.